Voice Assistants 101: A Look at How Conversational AI Works

Adriana Campoy and Sebastián Sassi

August 28, 2019

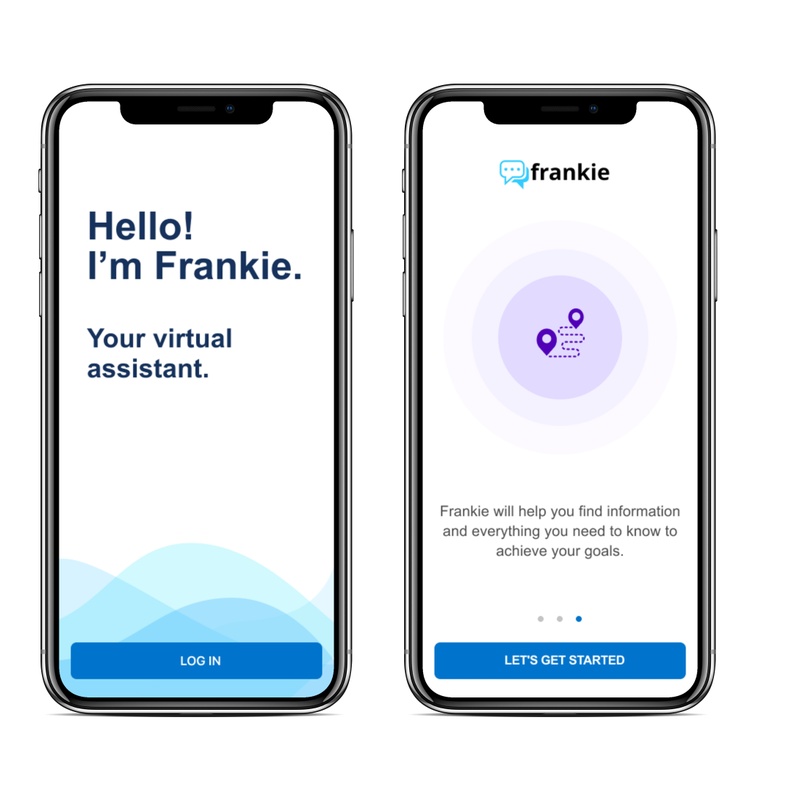

The prevalence of conversational AI in daily life is growing rapidly. Smart speakers and smartphone voice assistants have become normal parts of how many people look up information, perform tasks, and organize their schedules. But how exactly does this technology work? In this post, we'll walk through the typical steps a voice assistant takes to fulfill a spoken command and some of the software components that make it possible. We'll also take a look at some of the ways in which a voice assistant can provide real value for users.

Step 1: Hearing the Wake Word

A voice assistant needs to know when to start listening for a command. A wake word like "Alexa" or "OK Google" activates the assistant and serves as a cue that a command will follow. But how does a voice assistant know that the wake word has been said?

Voice assistants "hear" the wake word through a device's microphone. An Alexa-enabled smart speaker like the Amazon Echo is in effect always listening: it records audio in three-second segments and immediately deletes the recording if it has not detected the wake word. [1] Other kinds of voice assistants may listen for a wake word only when the app is open or when the user presses a button.
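To make the "always listening, but not keeping anything" idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular device is implemented: text strings stand in for audio segments, and `contains_wake_word` is a hypothetical placeholder for the trained model a real device would run.

```python
from collections import deque

SEGMENT_SECONDS = 3  # segment length mentioned in the text above


def contains_wake_word(segment: str, wake_word: str = "alexa") -> bool:
    """Hypothetical detector: a real device runs a trained acoustic model here."""
    return wake_word in segment.lower()


def listen(segments, wake_word="alexa"):
    """Return only the segments heard after the wake word.

    Segments heard before the wake word are never kept: the buffer holds
    a single segment, so each new one overwrites the previous one.
    """
    buffer = deque(maxlen=1)
    awake = False
    command = []
    for segment in segments:
        if awake:
            command.append(segment)
            continue
        buffer.append(segment)  # replaces (discards) the earlier segment
        if contains_wake_word(segment, wake_word):
            awake = True
    return command


captured = listen(["background chatter", "more chatter", "Alexa", "what's the weather"])
```

In this toy run, `captured` contains only the segment spoken after the wake word; the earlier chatter is dropped as soon as the next segment arrives.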

Voice assistants use machine learning to discern utterances, and this includes the wake word. During development, voice assistants are given many different examples of the spoken wake word. This training process teaches the voice assistant to recognize the wake word regardless of variables like the speaker's gender or accent and the amount of background noise. [2] Voice assistants designed for more specific purposes can even learn to recognize a particular human voice, which can serve as a method of authentication.

Step 2: Understanding the Voice Command

Once the assistant has been activated, the user can ask a question or request that the voice assistant perform a task. Machine learning allows the voice assistant to filter out background noise and comprehend a wide variety of voices. To understand what the user said, the voice assistant first uses speech-to-text software to transcribe the request, and then applies natural language processing (NLP) techniques such as named-entity recognition (NER) to extract the important information. If the assistant doesn't understand the question or needs more information to complete the task, it will formulate a follow-up question and use text-to-speech software to ask for clarification or more specifics.
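The flow from transcript to extracted entities to follow-up question can be sketched roughly as follows. This is a simplified illustration: it assumes speech-to-text has already produced a transcript, and it uses hand-written regular expressions as a stand-in for a trained NER model. The intent name, slot names, and wording of the replies are all hypothetical.

```python
import re

# Hypothetical: the pieces of information a "schedule a meeting" request needs.
REQUIRED_SLOTS = {"schedule_meeting": ["person", "time"]}


def extract_entities(transcript: str) -> dict:
    """Toy rule-based extractor standing in for a real NER model."""
    entities = {}
    person = re.search(r"\bwith ([A-Z][a-z]+)", transcript)
    time = re.search(r"\bat (\d{1,2}(?::\d{2})?\s?(?:am|pm))", transcript, re.IGNORECASE)
    if person:
        entities["person"] = person.group(1)
    if time:
        entities["time"] = time.group(1)
    return entities


def respond(transcript: str) -> str:
    """Fulfill the request, or ask a follow-up question if something is missing."""
    entities = extract_entities(transcript)
    missing = [slot for slot in REQUIRED_SLOTS["schedule_meeting"] if slot not in entities]
    if missing:
        return f"What {missing[0]} should I use?"
    return f"Scheduling a meeting with {entities['person']} at {entities['time']}."
```

For example, `respond("Schedule a meeting with Jane")` finds a person but no time, so the sketch asks for the missing detail instead of guessing.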

Step 3: Fulfilling the Request

How this part works can vary depending on the situation, but let's start with a general overview. Based on the information it has extracted, the voice assistant can answer the user's question or perform the appropriate task by searching the internet or connecting to other apps, such as the user's calendar or email. Machine learning enables a voice assistant to discover the user's preferences. The more the user enlists the help of the voice assistant, the better it gets at predicting what they need.

While voice assistants for personal use are limited to what they can find in a Google search or synced apps, custom voice assistants built for business use can access specialized data that makes users' jobs easier. Voice assistants have the potential to significantly boost productivity in a wide range of industries by offering access to all the data users need to do their job in one easy-to-use platform. In addition, machine learning capabilities allow voice assistants to personalize workflows and offer helpful insights at the right time.

But how exactly does a voice assistant fulfill a voice command? Let's go a bit more in depth and look at some of the key capabilities that affect how an assistant responds.

Handling the Context

It's essential for a voice assistant to have a strong grasp of context. This includes understanding the conditions in which someone uses the assistant. For example, a good voice assistant should be able to detect when the user is driving and automatically switch to voice-only mode. This means that when it gives a response, it won't use visuals like images, graphs, and maps, which can distract the user when they should keep their eyes on the road.

Another key part of understanding context is remembering previous information that the user has provided and using it to make inferences about what the user means as the conversation continues. For example, a user may ask "What's John Bennet's address?", receive an answer, and then ask "How far is that from my house?" A quality voice assistant should be able to infer that "that" refers to John Bennet's address, and that the answer to this question should include not only the distance, but also the amount of time it takes to drive there from the user's house. Machine learning is crucial to these kinds of processes.
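As a rough illustration of conversational memory, the sketch below keeps track of the last place mentioned and substitutes it for "that" in a follow-up question. Real assistants rely on learned models for this kind of reference resolution; the `Conversation` class, the address book, and the address itself are hypothetical and exist only for this example.

```python
class Conversation:
    """Remembers the most recently mentioned place across turns."""

    def __init__(self, address_book):
        self.address_book = address_book
        self.last_place = None

    def ask_address(self, name):
        # Answer the question and remember the place for later turns.
        self.last_place = self.address_book[name]
        return self.last_place

    def resolve(self, utterance):
        """Replace the pronoun 'that' with the remembered place, if there is one."""
        if self.last_place and "that" in utterance.split():
            return utterance.replace("that", self.last_place, 1)
        return utterance


# Hypothetical data for the example in the text.
chat = Conversation({"John Bennet": "12 Elm Street"})
chat.ask_address("John Bennet")
resolved = chat.resolve("How far is that from my house?")
```

Once the follow-up has been rewritten into a self-contained question, it can be passed to a maps or routing service to get both distance and driving time.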

Reasoning

Machine learning is also fundamental to a voice assistant's ability to make logical deductions based on simple calculations. This capability makes voice assistants especially useful in a work context, as users can optimize their productivity and gain valuable insights.

To illustrate this, let's consider a voice assistant designed for a sales team. A user might ask it to compare the number of sales Jane and Tim closed over the last month. The voice assistant can not only provide the requested data, but also note that the majority of sales were closed using a credit card and that Tim only accepts debit, check, or cash. When the voice assistant presents this information, it can offer the insight that accepting credit cards will likely increase Tim's sales. The ability to reach such conclusions on its own enables a voice assistant to provide great value with minimal time and effort expended by the user.
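The sales example can be sketched with a few lines of Python. The records, payment methods, and function name below are made up for illustration, and the "insight" is a simple rule rather than a learned model: find the most common payment method and flag any rep who doesn't accept it.

```python
from collections import Counter

# Hypothetical sales records for the last month.
SALES = (
    [{"rep": "Jane", "payment": "credit"}] * 4
    + [{"rep": "Jane", "payment": "debit"}]
    + [{"rep": "Tim", "payment": "debit"}, {"rep": "Tim", "payment": "cash"}]
)

# Hypothetical: the payment methods each rep accepts.
ACCEPTED = {
    "Jane": {"credit", "debit", "check", "cash"},
    "Tim": {"debit", "check", "cash"},
}


def compare_reps(sales, accepted, reps):
    """Return sales counts per rep plus any payment-method insights."""
    per_rep = Counter(sale["rep"] for sale in sales)
    counts = {rep: per_rep[rep] for rep in reps}
    top_payment, _ = Counter(sale["payment"] for sale in sales).most_common(1)[0]
    insights = [
        f"{rep} does not accept {top_payment}, the most common payment method."
        for rep in reps
        if top_payment not in accepted[rep]
    ]
    return counts, insights


counts, insights = compare_reps(SALES, ACCEPTED, ["Jane", "Tim"])
```

Here the assistant would report the two totals and add the observation about Tim, which is the kind of unprompted insight described above.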

Robotic Process Automation (RPA)

Fulfilling a voice command can mean carrying out an entire multi-step process. Robotic process automation (RPA) technology enables voice assistants to complete a range of tasks from the beginning to the end of a workflow.

For example, Google Duplex allows Google Assistant to make phone calls in order to reserve a table at a restaurant, book an appointment at a hair salon, or inquire about business hours. The voice assistant can complete the entire process without the intervention of a human user, since a recurrent neural network handles the complexities of a natural conversation with the person at the other end of the call. [3]

Other applications of RPA include having a voice assistant send automatic follow-up emails or check UPS, FedEx, or USPS for the status of a shipment. Custom voice assistants for enterprises can even take care of mundane and time-consuming internal company processes.
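One simple way to picture a multi-step workflow is as a list of steps that each take the current state and return an updated one. The sketch below follows the shipment follow-up example. Every function here is hypothetical: the lookup uses a hard-coded table instead of a real carrier service, and "sending" just appends to an in-memory outbox.

```python
def look_up_shipment(ctx):
    # Hypothetical stand-in for a carrier lookup; no real carrier API is called.
    statuses = {"1Z999": "out for delivery"}
    return {**ctx, "status": statuses.get(ctx["tracking_number"], "unknown")}


def draft_email(ctx):
    body = f"Your order {ctx['tracking_number']} is {ctx['status']}."
    return {**ctx, "email": body}


def send_email(ctx):
    # Hypothetical: append to an in-memory outbox instead of sending mail.
    return {**ctx, "outbox": ctx.get("outbox", []) + [ctx["email"]]}


WORKFLOW = [look_up_shipment, draft_email, send_email]


def run_workflow(steps, ctx):
    """Run each step in order, passing the updated context along."""
    for step in steps:
        ctx = step(ctx)
    return ctx


result = run_workflow(WORKFLOW, {"tracking_number": "1Z999"})
```

Because each step only reads and extends the shared context, steps can be reordered or swapped for real integrations without changing the runner.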

Step 4: Reminders and Notifications

A voice assistant's job is not over when an interaction with the user finishes. Users can set a voice reminder for a later time. A mobile voice assistant can also send push notifications to alert the user before appointments and important events. It can even let the user know to leave early for a meeting if there's a traffic jam.
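The "leave early" reminder boils down to simple time arithmetic. The sketch below assumes the assistant already knows the usual travel time and the current traffic delay (in practice these would come from a maps service); the function name and the five-minute buffer are illustrative choices.

```python
from datetime import datetime, timedelta


def departure_time(meeting_start, usual_minutes, traffic_delay_minutes=0, buffer_minutes=5):
    """Work out when the user should leave to arrive on time."""
    total = usual_minutes + traffic_delay_minutes + buffer_minutes
    return meeting_start - timedelta(minutes=total)


meeting = datetime(2019, 8, 28, 14, 0)
normal = departure_time(meeting, usual_minutes=20)
with_traffic = departure_time(meeting, usual_minutes=20, traffic_delay_minutes=15)
```

With a 15-minute delay, the suggested departure moves from 1:35 p.m. to 1:20 p.m., and the assistant can send a push notification at that earlier time.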

Voice and push notifications are especially handy in a professional setting. Smart assistants can alert users when there are anomalies in the data such as a sudden drop in sales, a spike in costs, or an issue with company operations.
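A very basic version of this kind of alert compares each new value against the average of the values just before it. This is only a sketch of the idea: the window size, the 30% threshold, and the sales figures are arbitrary assumptions, and the code assumes positive values so the baseline is never zero.

```python
from statistics import mean


def find_anomalies(values, window=3, threshold=0.3):
    """Flag values that differ from the recent average by more than the threshold."""
    alerts = []
    for i in range(window, len(values)):
        baseline = mean(values[i - window:i])
        change = (values[i] - baseline) / baseline
        if abs(change) > threshold:
            alerts.append((i, "drop" if change < 0 else "spike"))
    return alerts


# Hypothetical daily sales figures.
daily_sales = [100, 102, 98, 101, 60, 99]
alerts = find_anomalies(daily_sales)
```

In this run only the fifth day (index 4) is flagged as a drop, which is the moment an assistant could send the user a notification.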

Knowing how voice technology works can help us understand its potential for making our daily tasks easier. While voice assistants already have a strong presence in many people's personal lives, they also present a great opportunity for companies to unlock productivity and vastly improve the user experience for employees. Early adopters have the most to gain from this competitive advantage.

At sophilabs, we're experts in voice technology. We have experience building apps that use conversational AI and machine learning to fulfill specific and varied user needs. It's exciting to see the complex problems voice assistants are capable of solving, and we're looking forward to tackling more challenges with voice technology.

1. Josh Hendrickson, "How Alexa Listens for Wake Words," How-To Geek, July 15, 2019.

2. Rowan Trollope, "7 Things You Didn't Know About Wake Words," Medium, November 29, 2017.

3. Yaniv Leviathan and Yossi Matias, "Google Duplex: An AI System for Accomplishing Real-World Tasks Over the Phone," Google AI Blog, May 8, 2018.

Photo by sophilabs.
